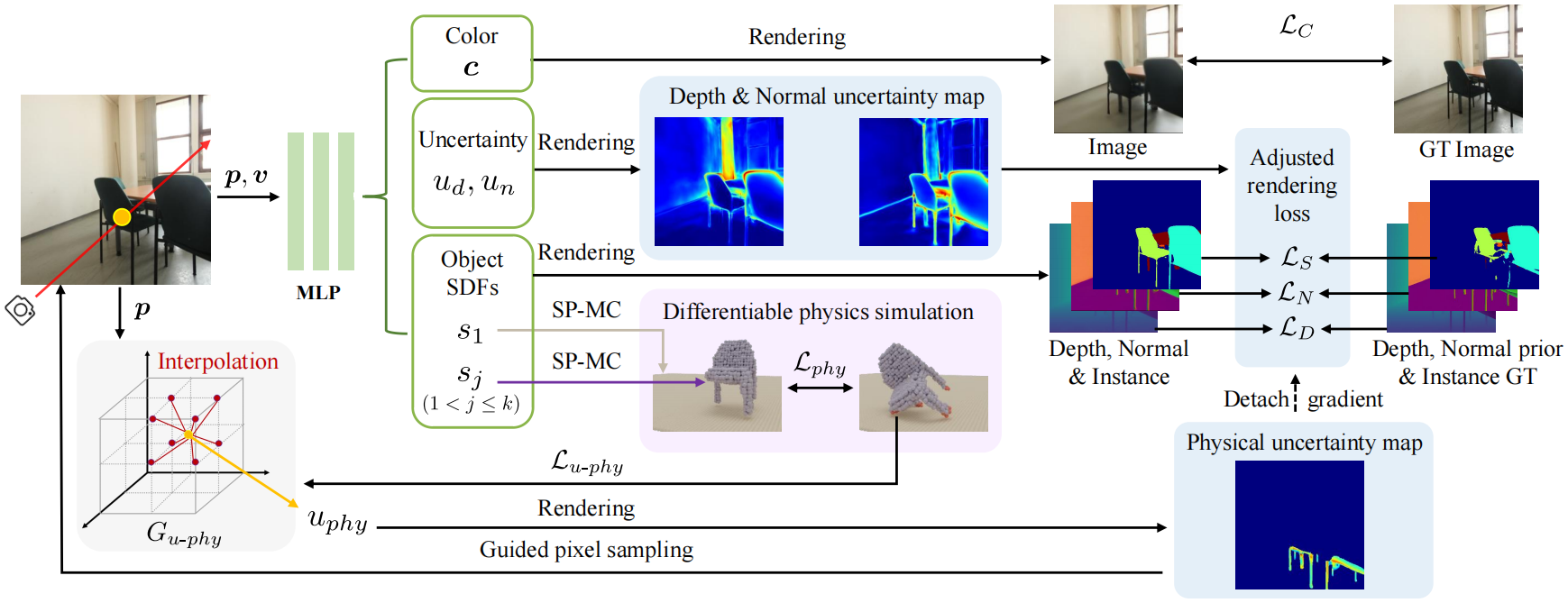

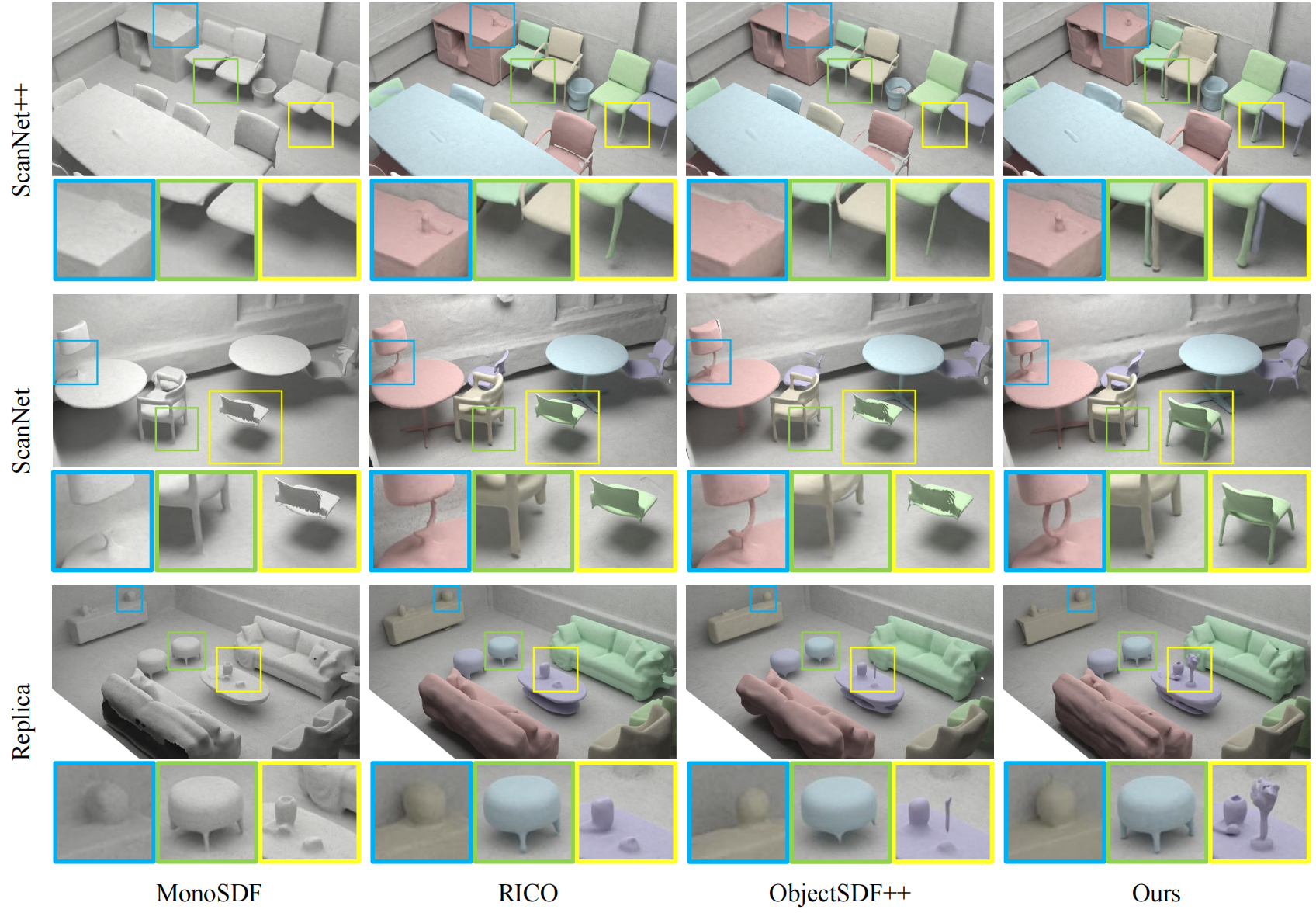

Method

Our framework bridges neural scene reconstruction and physics simulation to jointly model physics, geometry, and appearance. We implement a particle-based physical simulator and an efficient method for converting SDF-based neural implicit representations into explicit representations suited to physics simulation. We further propose a joint uncertainty modeling approach, covering both rendering uncertainty and physical uncertainty, to mitigate inconsistencies and improve the reconstruction of thin structures.
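
To make the implicit-to-explicit step concrete, the following is a minimal sketch of one simple way to turn a signed distance function into a particle set: evaluate the SDF on a regular grid and keep the points with non-positive distance. This is an illustrative assumption, not the conversion method proposed here; `sphere_sdf` stands in for a learned neural SDF, and `sdf_to_particles`, the bounds, and the grid resolution are hypothetical choices.

```python
import itertools
import math


def sphere_sdf(p, radius=0.5):
    """Toy SDF (stand-in for a neural SDF): negative inside a sphere at the origin."""
    x, y, z = p
    return math.sqrt(x * x + y * y + z * z) - radius


def sdf_to_particles(sdf_fn, lo=-1.0, hi=1.0, resolution=16):
    """Sample a regular grid in [lo, hi]^3 and keep points on or inside the surface.

    Hypothetical helper: the returned points could seed a particle-based simulator.
    """
    step = (hi - lo) / (resolution - 1)
    axis = [lo + i * step for i in range(resolution)]
    # Keep only grid points whose signed distance is non-positive (interior or surface).
    return [p for p in itertools.product(axis, repeat=3) if sdf_fn(p) <= 0.0]


particles = sdf_to_particles(sphere_sdf)
print(len(particles), "particles sampled inside the surface")
```

Dense grid sampling of this kind scales cubically with resolution and can miss structures thinner than the grid spacing, which is one reason a more efficient, surface-aware conversion is desirable.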